AI Supply Chain Report

Use the AI Supply Chain report to monitor and control end user access to AI model files that can pose AI supply chain security and compliance risks.

Secure Access can identify and block end user attempts to download AI model files developed or authored by prohibited suppliers, released under a copyleft license, or capable of executing arbitrary code.

For more information, see Foundation AI: Robust Intelligence for Cybersecurity and Cisco AI Supply Chain Risk Management.

Prerequisites

- A security profile for internet access with both Decryption and AI Supply Chain Blocking enabled. For more information, see Security Profiles for Internet Access.

- At least one internet access rule that uses a security profile for internet access with AI Supply Chain Blocking enabled. For more information, see Configure Security Profiles for Internet Access.

Procedure

Navigate to Monitor > AI Supply Chain.

The AI Supply Chain dashboard shows information about AI model risk monitoring and model file download attempts by your end users.

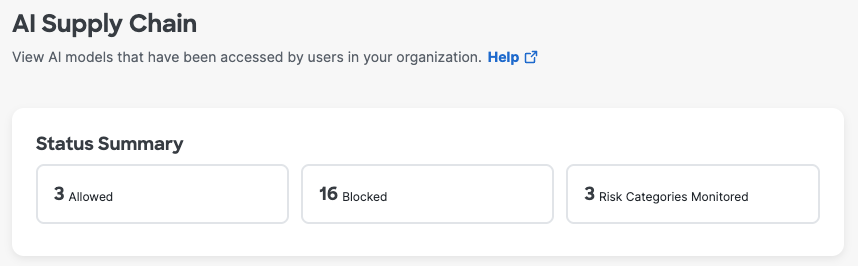

The Status Summary shows the total numbers of allowed and blocked AI model downloads in your organization, as well as the number of risk categories that you are monitoring.

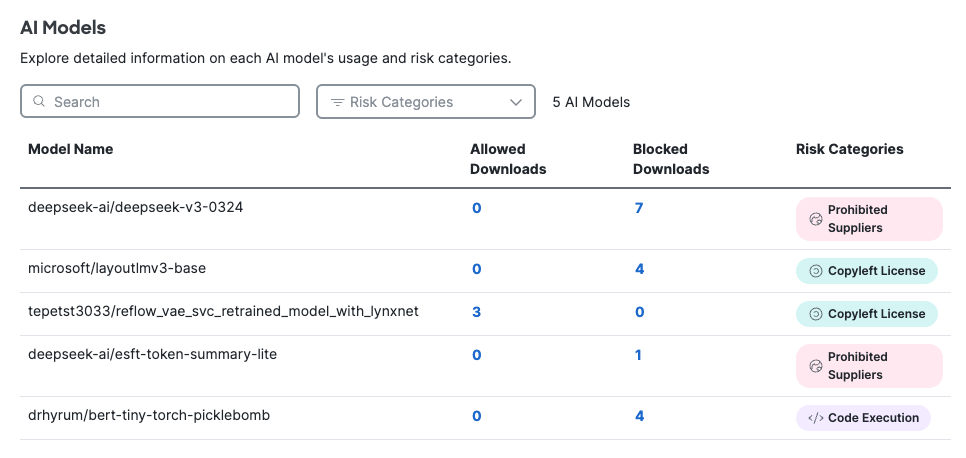

Search the AI Models table by model name or filter by risk category. The AI Models table shows:

- Model Name—The unique identifier of an AI model.

- Number of Allowed and Blocked Downloads—Click the numbers in the table to view details in the Activity Search report about allowed or blocked attempts to download files identified as related to this AI model. For more information, see Activity Search Report.

- Risk Categories—Each model name will be associated with one or more of the three risk categories provided by Foundation AI: Prohibited Suppliers, Copyleft License, and Code Execution.

More about Risk Categories Provided by Cisco Foundation AI

- Prohibited Suppliers—AI models developed or authored by prohibited suppliers may present risks related to national security, data privacy, and influence operations. Depending on the development and deployment context, models in this category may be subject to regulatory oversight, data access laws, or government influence that raises concerns about embedded backdoors, surveillance capabilities or biased outputs shaped by geopolitical agendas.

- Copyleft License—AI models released under copyleft licenses can introduce legal and operational risks due to restrictive licensing terms. Copyleft licenses may require that derivative works, deployments that depend on the model, or other indirect uses be released under the same license. This may present risks related to compliance with the license's disclosure requirements, legal exposure from violations of the license terms, and constraints on how the model can be integrated, scaled, or monetized.

- Code Execution—AI models capable of executing arbitrary code create security and reliability risks. If a model can generate and execute code dynamically, it may inadvertently or maliciously run harmful operations, access sensitive data, or interact with systems in unintended ways. A model's ability to execute arbitrary code bridges the gap between text generation and real-world impact, making it possible for a model to cause damage ranging from data breaches to system compromise.
